Call on extensible RMI

An introduction to JERI

The ability to invoke methods on one Java object from objects residing in another JVM has been a standard Java feature since the JDK 1.1 release. The Remote Method Invocation (RMI) framework makes a remote method invocation follow a local method call’s syntax, while also explicitly acknowledging the semantic differences between local and remote object invocation. The combination of that simplicity and power has made RMI a popular distributed programming model.

Its popularity notwithstanding, RMI thus far has been limited to distributed systems that operate within the confines of a single administrative domain. The main reason for that limit: RMI lacked the security features required of wide-area distributed systems, especially systems that perform method invocations across the public Internet. The standard RMI API offered no access to features for customizing RMI calls, and existing RMI implementations provided no programmatic means of securing RMI method invocations.

In addition, growing experience with RMI provided a wealth of new feature requests from RMI users. One common developer gripe was the need to explicitly generate stub classes for an RMI server with the rmic compiler instead of dynamically creating proxy classes.

The Sun Microsystems RMI team answered the need for a customization API, along with many RMI feature requests, in its latest RMI implementation, Jini Extensible Remote Invocation (JERI). JERI provides programmatic access to each layer of an RMI call via an API and allows an RMI service deployer to choose the RMI implementation best suited to a deployment scenario. JERI also defines a uniform mechanism for making remote objects available to answer remote method calls (object exporting), which prior RMI releases did not standardize. As a result of these features, RMI calls can now be configured to meet a wide range of security requirements. While distributed system security is not a novel subject, securing RMI calls poses a few special challenges due to RMI’s mobile code capability.

The case for secure mobile code

When RMI debuted, Web-scale remote method invocation was hardly a feature required of distributed systems. At that time, Web-based distributed computing amounted to simple interaction between Web browsers and Web servers in the form of CGI programs and HTTP servlets. As Web-aware distributed systems’ requirements grew over the last several years, the limits of HTTP’s GET and POST methods for distributed computing became apparent. In response, Simple Object Access Protocol (SOAP) emerged as an XML-based messaging layer on top of HTTP and other Internet protocols. XML-based Web services rely on the exchange of SOAP messages to facilitate distributed computing.

From a Java programmer’s viewpoint, SOAP and Java couldn’t be more different. SOAP defines a way to encode textual and binary data in XML data structures; Java code, on the other hand, can express not only data, but also algorithms that operate on data. While SOAP-aware APIs, such as SOAP with Attachments API for Java (SAAJ) and Java API for XML-based RPC (JAX-RPC), can make a SOAP-based method invocation syntactically appear as if that invocation were a simple Java method call, no SOAP-aware API can mask the semantic difference between Java and SOAP.

When a SOAP message traverses the network, that message is confined to carrying markup tags that might convey some semantic meaning at a distant network location. For SOAP to work, that distant network node must first be able to interpret the semantic markup contained in a SOAP message and, second, must have code available to operate on the SOAP message data. Interpreting data elements and performing a set of agreed-upon operations on that data form the core of a SOAP-based Web service protocol. Current XML-based Web services define SOAP protocols for specialized problem domains such as Web service registries, for example Universal Description, Discovery, and Integration (UDDI) and ebXML, or electronic data interchange (EDI).

In contrast to SOAP, RMI relies on Java code as a unit of information exchange in a distributed system. As a programming language, Java encapsulates both data and code. That difference means RMI system participants need to share only the semantics of objects expressed in Java code. The protocols each object uses for network communication remain an implementation detail hidden from other network participants. That single requirement, in turn, raises the abstraction level of a distributed system from protocols to interactions between objects. That higher abstraction level lends network programming an object-oriented flavor and affords a close semantic match between local and network programming.

RMI heralded that simpler programming model by solving a crucial problem: automatically moving code on the network. To illustrate that achievement’s importance, consider the following two Java interfaces:

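import java.io.Serializable;
import java.rmi.Remote;
import java.rmi.RemoteException;

// A worker in a generic compute server. As a remote interface, it extends
// Remote, and its remotely invocable method declares RemoteException.
// Result is an application-defined type.
public interface Worker extends Remote {
    public Result processJob(Job job) throws RemoteException;
}

// A unit of work, passed to the worker by value, so it must be Serializable
public interface Job extends Serializable {
    public Object compute();
}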

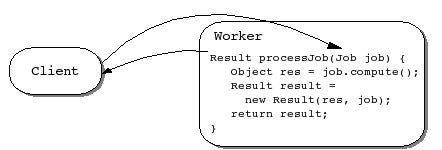

The first interface defines a worker in a generic compute server. When a Job instance is passed to Worker’s processJob() method, Worker invokes the Job object’s compute() method and returns the results to the caller. Figure 1 illustrates that generic compute server.
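When caller and worker share a JVM, the behavior just described needs no remoting at all. The following minimal in-process sketch shows the worker calling compute() on whatever job it receives. To stay self-contained, it redeclares simplified versions of the two interfaces with Object in place of the application-defined Result type; LocalComputeServer and SimpleWorker are hypothetical names chosen for illustration.

```java
/**
 * In-process sketch of the generic compute server. Result is replaced by
 * Object, and no RMI is involved because caller and worker share a JVM.
 */
public class LocalComputeServer {

    /** Simplified stand-in for the article's Job interface. */
    public interface Job {
        Object compute();
    }

    /** Simplified stand-in for the article's Worker interface. */
    public interface Worker {
        Object processJob(Job job);
    }

    /** A worker that runs each job in the caller's thread. */
    public static class SimpleWorker implements Worker {
        @Override
        public Object processJob(Job job) {
            // The job's classes were loaded by this same JVM, so the worker
            // can run its code without any class downloading.
            return job.compute();
        }
    }

    public static void main(String[] args) {
        Worker worker = new SimpleWorker();
        // A job defined by the caller: sum the integers 1..100
        Job sumJob = () -> {
            long sum = 0;
            for (int i = 1; i <= 100; i++) {
                sum += i;
            }
            return sum;
        };
        System.out.println(worker.processJob(sumJob)); // prints 5050
    }
}
```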

If Worker’s caller resides in the same JVM as the Worker, the caller can assume that the code for the parameter Job is available to the Worker object as well. In Java terms, the caller can assume the JVM that loaded the classes for Worker can also load Job’s classes.

That assumption may no longer hold when Worker and its caller reside in different JVMs, possibly on different hosts. Since Job is a parameter in Worker’s single method, the code for the Job interface must be available locally to Worker’s JVM. However, code for a specific Job implementation might not be available locally to Worker. For example, a Job might be implemented as follows:

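// A Job whose implementation relies on classes the Worker's host
// may not have: ParamOne, ParamTwo, and CPUIntensiveAlgorithm
public class LongComputeJob implements Job {

    private ParamOne firstParam;
    private ParamTwo secondParam;

    public LongComputeJob(ParamOne firstParam, ParamTwo secondParam) {
        this.firstParam = firstParam;
        this.secondParam = secondParam;
    }

    // Runs in the Worker's JVM, which must be able to load CPUIntensiveAlgorithm
    public Object compute() {
        return CPUIntensiveAlgorithm.crunch(firstParam, secondParam);
    }
}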

Beyond the Job interface itself, that implementation relies on four classes: LongComputeJob.class, ParamOne.class, ParamTwo.class, and CPUIntensiveAlgorithm.class. All four must be loaded in the JVM that executes Worker. If those classes are not already available on Worker’s host, the Java RMI runtime automatically downloads them from the network as needed. (See “Object Mobility in the Jini Environment” (JavaWorld, January 2001) for details of RMI’s mobile code feature.) Figure 2 illustrates an RMI system based on mobile code.

In most RMI implementations, code downloading and code execution occur with no opportunity to intervene programmatically. That convenience plants the seeds of many dangers. Because a Worker has no way to know how a Job is implemented before it starts executing the newly downloaded code, it has no way to control that job’s execution. Specifically, Worker cannot intercept a Job before invoking its methods or restrict access to the processJob() method based on the invoker’s identity.

Without configuration options on the RMI server, security is either handled by ad hoc solutions or reduced to an all-or-nothing choice. In the latter case, if CPUIntensiveAlgorithm is implemented to reformat the local hard disk and Worker possesses sufficient privileges in its JVM, Worker’s JVM will happily oblige in that act of malice. Because most RMI implementations do not provide a way to programmatically inspect and trace a remote method call, there is no way to grant different permissions based on the caller’s identity or the type of Job submitted. In effect, any client invoking Worker receives all of Worker’s privileges.

That simplistic security model renders RMI unsuitable for Web-wide distributed computing, where a system is open to invocations from potentially any client. JERI addresses these problems by providing API-level control over every aspect of an RMI call. The new JERI APIs do not replace the existing RMI API. Rather, they complement it with a configuration layer to which developers can explicitly program. Because that configuration layer lets a developer extend and tailor every detail of an RMI call, the new RMI implementation is named Extensible Remote Method Invocation.

Sun developers first proposed their Extensible RMI to the Java Community Process (JCP) in the form of two Java Specification Requests (JSRs) in 2001. Due to the exigencies of JCP politics, the JCP Executive Committees did not approve those JSRs. As a result, Extensible RMI, regrettably, has not become a part of the Java 2 Platform, Standard Edition (J2SE). Instead, the Sun team decided to continue the work on Extensible RMI as the foundation of the Davis Jini community project. In 2002, the Jini community voted to make the Davis specifications official Jini standards. Sun’s Jini Starter Kit 2.0, released in June 2003, provides a full implementation of Extensible RMI, as well as core Jini services that use Extensible RMI. Based on Extensible RMI, the Jini 2.0 release features a fully customizable security framework.

Because it was first released as the foundation of secure Jini, Extensible RMI is now called Jini Extensible Remote Invocation, or JERI. In the future, Extensible RMI will likely find its way into other RMI implementations unrelated to the Jini Starter Kit.

Client- and server-side RMI programming models

The mobile code semantics described in the previous section form only part of the RMI programming model. In addition to mobile code, the client-side portion of the RMI programming model requires remote objects to implement java.rmi.Remote (typically through a remote interface that extends it) and their remotely invocable methods to declare java.rmi.RemoteException. The RMI runtime serializes a remote method call’s parameter values and, in that serialized object stream, annotates each class with its codebase: a list of URLs from which the class can be loaded. When a Remote object is passed as a parameter to an RMI call, the RMI runtime substitutes a special stub for that object. Once transferred across the network, that stub forwards method invocations to the remote object.
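Serialization is what makes pass-by-value possible: the worker receives a copy of the caller’s job, not a reference to it. The sketch below imitates that step with plain java.io streams, assuming the job’s class is already on the receiver’s class path. RMI’s own marshal streams additionally write each class’s codebase annotation, which this sketch omits; PassByValueSketch and SquareJob are hypothetical names.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

public class PassByValueSketch {

    /** A job must be serializable to be passed by value in an RMI call. */
    public interface Job extends Serializable {
        Object compute();
    }

    /** Hypothetical job that carries its own state. */
    public static class SquareJob implements Job {
        private static final long serialVersionUID = 1L;
        private int n;

        public SquareJob(int n) { this.n = n; }

        public void setN(int n) { this.n = n; }

        @Override
        public Object compute() { return n * n; }
    }

    /** Writes an object to bytes and reads it back, as marshalling would. */
    @SuppressWarnings("unchecked")
    public static <T extends Serializable> T copyViaStream(T value)
            throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        }
        try (ObjectInputStream in = new ObjectInputStream(
                new ByteArrayInputStream(bytes.toByteArray()))) {
            return (T) in.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        SquareJob original = new SquareJob(7);
        SquareJob received = copyViaStream(original);
        // The receiver holds a copy, so later changes to the original are not seen
        original.setN(100);
        System.out.println(received.compute()); // prints 49
    }
}
```

Because the receiver holds an independent copy, changing the original after the call has no effect on the worker’s result.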

For an object to accept method invocations arriving from the network, the RMI runtime must export that object. Exporting a Remote object forms the core of the server-side RMI programming model. While the initial RMI specifications clearly defined the client’s programming model, they left considerable leeway on the server side. As a result, various RMI implementations export objects differently. For instance, if an object extends UnicastRemoteObject, the RMI runtime in the JDK automatically exports that object when it is constructed. How that exporting occurs, however, is beyond a developer’s control and is specific to an RMI implementation. By the same token, how an object is unexported depends on the RMI implementation as well.

A key JERI contribution is a new server-side programming model that defines a standard way to export and unexport an object. Rather than having the programmer hard-code the specifics of exporting and unexporting an object, the JERI server-side programming model relies on deployment-time configuration options that configure an Exporter instance for each remote object. When configuring an object’s Exporter, the configuration options can specify any restrictions, or constraints, to be placed on the exported object. Many of those constraints may be security related: For instance, a constraint might require that a client authenticate itself before invoking the server’s remotely accessible methods.

Configuring an exporter

An object that implements the net.jini.config.Configuration interface represents an application’s configuration. In the RMI compute server, for example, a Configuration would determine which exporter exports a worker. Instead of directly instantiating a Configuration, an application obtains its configuration from a ConfigurationProvider. A configuration provider can retrieve an application’s configuration from any source. The default configuration provider for Jini and JERI applications relies on the ConfigurationFile class, which, in turn, populates the configuration data from the contents of a configuration file.

A configuration consists of configuration entries. Each entry is identified by the component that the entry configures and the entry’s name. When retrieving an entry from a configuration, a caller can optionally specify a default value as well. The following code snippet shows how to obtain a configuration for the compute server’s worker, how to use that configuration to retrieve an exporter, and, finally, how to export the worker with that exporter:

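import java.rmi.Remote;
import java.rmi.RemoteException;

import net.jini.config.Configuration;
import net.jini.config.ConfigurationProvider;
import net.jini.export.Exporter;

public class WorkerImpl implements Worker {

    private Exporter xp;

    void initWorker(String[] providerArgs) throws Exception {
        // Obtain the configuration, for instance from the configuration
        // file named in providerArgs
        Configuration cfg = ConfigurationProvider.getInstance(providerArgs);

        // Look up the "exporter" entry; the component name must match
        // the one used in the configuration file
        xp = (Exporter) cfg.getEntry("worker.WorkerImpl",
                                     "exporter",
                                     Exporter.class);

        // Export the worker; clients call it through the returned proxy
        Remote workerProxy = xp.export(this);
    }

    void shutDownWorker() {
        // true: unexport even if calls are pending or in progress
        xp.unexport(true);
    }

    public Result processJob(Job job) throws RemoteException {
        Object obj = job.compute();
        ...
        return result;
    }
}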
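The lookup contract described above (an entry keyed by component and name, checked against an expected type, with an optional default) can be tried out without the Jini libraries. MapConfiguration below is a hypothetical, map-backed stand-in, not net.jini.config: it mirrors the shape of Configuration.getEntry but signals missing or mistyped entries with standard Java exceptions, whereas the real interface throws ConfigurationException and its subclass NoSuchEntryException.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.NoSuchElementException;

/**
 * Hypothetical, map-backed stand-in for a configuration: each entry is
 * identified by the component it configures plus the entry's name.
 */
public class MapConfiguration {
    private final Map<String, Object> entries = new HashMap<>();

    private static String key(String component, String name) {
        return component + "#" + name;
    }

    public MapConfiguration put(String component, String name, Object value) {
        entries.put(key(component, name), value);
        return this;
    }

    /** Returns the entry, failing if it is missing or has the wrong type. */
    public <T> T getEntry(String component, String name, Class<T> type) {
        String k = key(component, name);
        if (!entries.containsKey(k)) {
            throw new NoSuchElementException("no entry " + component + "." + name);
        }
        Object value = entries.get(k);
        if (!type.isInstance(value)) {
            throw new IllegalArgumentException(
                    component + "." + name + " is not a " + type.getName());
        }
        return type.cast(value);
    }

    /** Same lookup, but returns defaultValue when the entry is absent. */
    public <T> T getEntry(String component, String name, Class<T> type, T defaultValue) {
        return entries.containsKey(key(component, name))
                ? getEntry(component, name, type)
                : defaultValue;
    }

    public static void main(String[] args) {
        MapConfiguration cfg = new MapConfiguration()
                .put("worker.WorkerImpl", "port", 9999);
        int port = cfg.getEntry("worker.WorkerImpl", "port", Integer.class);
        int threads = cfg.getEntry("worker.WorkerImpl", "threads", Integer.class, 4);
        System.out.println(port + " " + threads); // prints 9999 4
    }
}
```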

While XML is currently the favorite file format for system configuration files, JERI configuration files follow a syntax that is a subset of the Java programming language. For instance, a configuration file for the compute server worker might contain the following:

import net.jini.jrmp.*;

worker.WorkerImpl {
    exporter = new JrmpExporter();
}

Passing the name of that file as an argument to initWorker() causes the compute server worker to be exported by the JRMP exporter, which uses the Java Remote Method Protocol (JRMP) of the original RMI implementation. Another configuration file might look as follows:

import net.jini.iiop.*;

worker.WorkerImpl {
    exporter = new IiopExporter();
}

Passing this file’s name will result in the worker listening for incoming calls via RMI-IIOP (Internet Inter-ORB Protocol). Or, yet another configuration might specify the following:

import net.jini.jeri.*;
import net.jini.jeri.http.*;

worker.WorkerImpl {
    exporter = new BasicJeriExporter(HttpServerEndpoint.getInstance(9999),
                                     new BasicILFactory());
}

This last file causes the worker to be exported with JERI over HTTP, listening on port 9999. As these examples show, the protocol an RMI server uses is determined entirely by which exporter exports that object. Switching from one protocol to another requires no code changes.
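The same separation can be sketched in plain Java: the worker depends only on an exporter abstraction, and the concrete class is named outside the code. ProtocolExporter, JrmpStyleExporter, and HttpStyleExporter are hypothetical stand-ins rather than JERI types, and a class-name string plays the role of the configuration file.

```java
/**
 * Plain-Java illustration of deployment-time protocol selection. Code that
 * exports objects depends only on ProtocolExporter; the implementation is
 * chosen by a class name supplied from outside the code.
 */
public class ExporterSelectionSketch {

    public interface ProtocolExporter {
        /** Returns a description of how impl is made remotely reachable. */
        String export(Object impl);
    }

    public static class JrmpStyleExporter implements ProtocolExporter {
        @Override
        public String export(Object impl) { return "JRMP:" + impl; }
    }

    public static class HttpStyleExporter implements ProtocolExporter {
        @Override
        public String export(Object impl) { return "HTTP:" + impl; }
    }

    /** Instantiates the exporter class named by the deployment. */
    public static ProtocolExporter load(String className)
            throws ReflectiveOperationException {
        return Class.forName(className)
                .asSubclass(ProtocolExporter.class)
                .getDeclaredConstructor()
                .newInstance();
    }

    public static void main(String[] args) throws Exception {
        // In a real deployment this name would come from a configuration file
        String configured = args.length > 0 ? args[0]
                : ExporterSelectionSketch.class.getName() + "$HttpStyleExporter";
        ProtocolExporter exporter = load(configured);
        System.out.println(exporter.export("worker")); // prints HTTP:worker
    }
}
```

Pointing the class name at JrmpStyleExporter changes the outcome without recompiling the code that calls load().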

Configuring the RMI protocol stack

Exporting an object through a JERI Exporter allows control over every aspect of an RMI method invocation. To understand the various configuration options, it is helpful to follow the path of an RMI call through the RMI protocol stack. Figure 3 illustrates how a method call traverses the various RMI layers.

A remote object’s proxy implements the client side of the RMI protocol stack; the server side executes on the remote object’s server. The exporter is responsible for creating a proxy capable of executing the client side of each protocol step. JERI allows customization of each protocol layer.

The client’s invocation layer initiates a remote method call. When the client invokes a method on the remote object’s proxy, the proxy dispatches that method invocation to its InvocationHandler. When the call arrives on the server, an InvocationDispatcher receives it. The dispatcher unmarshals the method and its arguments, invokes that method on the exported object, and finally marshals the return value (or any exception thrown) so that the result can be sent back to the client.
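JERI’s client-side invocation layer builds on the standard dynamic proxy mechanism in java.lang.reflect. The following in-process sketch pairs a Proxy whose InvocationHandler forwards each call with a dispatcher that invokes the target by reflection, mimicking the two ends of the invocation layer. It skips marshalling, object identification, and transport entirely, and its class names are hypothetical.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;

public class InvocationLayerSketch {

    /** Simplified stand-ins for the article's interfaces. */
    public interface Job { Object compute(); }
    public interface Worker { Object processJob(Job job); }

    /** Server side: stands in for an InvocationDispatcher. */
    static class Dispatcher {
        private final Object impl;

        Dispatcher(Object impl) { this.impl = impl; }

        Object dispatch(Method method, Object[] args) throws Throwable {
            try {
                // A real dispatcher would first unmarshal the method and
                // arguments from the incoming request stream.
                return method.invoke(impl, args);
            } catch (InvocationTargetException e) {
                // Rethrow whatever the target method itself threw
                throw e.getCause();
            }
        }
    }

    /** Client side: stands in for the proxy's InvocationHandler. */
    static class ForwardingHandler implements InvocationHandler {
        private final Dispatcher dispatcher;

        ForwardingHandler(Dispatcher dispatcher) { this.dispatcher = dispatcher; }

        @Override
        public Object invoke(Object proxy, Method method, Object[] args) throws Throwable {
            // A real handler would marshal the call and pass it to the
            // object identification and transport layers.
            return dispatcher.dispatch(method, args);
        }
    }

    /** Builds a proxy implementing Worker that forwards every call to impl. */
    public static Worker createProxy(Worker impl) {
        return (Worker) Proxy.newProxyInstance(
                Worker.class.getClassLoader(),
                new Class<?>[] { Worker.class },
                new ForwardingHandler(new Dispatcher(impl)));
    }

    public static void main(String[] args) {
        Worker proxy = createProxy(job -> job.compute());
        System.out.println(proxy.processJob(() -> 6 * 7)); // prints 42
    }
}
```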

The object identification layer tracks exported objects. That layer is also responsible for managing several aspects of exported objects, such as distributed garbage collection. On the client side, an ObjectEndpoint identifies a remote object. On the server, a RequestDispatcher is responsible for dispatching inbound requests based on an object’s identity.
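Tracking exported objects amounts to keeping a table from object identifiers to implementations and routing each inbound request by identifier. A minimal in-process sketch, assuming java.util.UUID as the identifier (JERI defines its own net.jini.id.Uuid type) and ignoring distributed garbage collection; ObjectTableSketch is a hypothetical name.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/**
 * Hypothetical object table for the object identification layer:
 * exporting assigns an ID, and inbound requests are routed by that ID.
 */
public class ObjectTableSketch {

    private final Map<UUID, Object> exported = new ConcurrentHashMap<>();

    /** Registers impl and returns the ID a client-side endpoint would carry. */
    public UUID export(Object impl) {
        UUID id = UUID.randomUUID();
        exported.put(id, impl);
        return id;
    }

    /** Removes the object; later requests for its ID are rejected. */
    public boolean unexport(UUID id) {
        return exported.remove(id) != null;
    }

    /** Routes a request to the object registered under id. */
    public <T, R> R dispatch(UUID id, Class<T> type, Function<T, R> request) {
        Object impl = exported.get(id);
        if (impl == null) {
            // RMI reports calls to unexported objects with
            // java.rmi.NoSuchObjectException; this sketch uses a plain exception.
            throw new IllegalStateException("no such object: " + id);
        }
        return request.apply(type.cast(impl));
    }

    public static void main(String[] args) {
        ObjectTableSketch table = new ObjectTableSketch();
        UUID id = table.export(new StringBuilder("worker"));
        System.out.println(table.dispatch(id, StringBuilder.class, sb -> sb.length())); // prints 6
        table.unexport(id);
    }
}
```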

The transport layer is responsible for encoding the remote method call in a transport-specific format and sending that call across the network. An Endpoint encapsulates the information necessary to send a request to the remote server. Endpoint instances are created by the server’s ServerEndpoint at the time the server is exported. An endpoint is specific to the kind of network transport used: for instance, TcpEndpoint, HttpEndpoint, or SslEndpoint.

The JERI APIs provide several default implementations of each of the three RMI layers. In addition, JERI provides classes to aid in implementing custom protocol layers. The only requirement on the programmer is that corresponding client and server protocol layers remain compatible.

Customizing an RMI call

To make RMI customization concrete, this article’s concluding example implements a simple RMI compute server that intercepts method invocations from clients before executing the requested computation. Intercepting a job submission allows the server to determine, for instance, whether its load average is low enough to accept the job. A busy server could reject the method call, prompting the client to submit the job to another worker.

While it is possible to code that logic directly into the worker’s implementation, JERI allows that logic to become a deployment-time decision. Instead of making changes to the server’s code, a custom RMI invocation layer can intercept a method call and decide whether that call should proceed.

To intercept incoming method calls on the server, you must install a custom invocation layer when you export the remote compute server worker. The net.jini.jeri package contains basic implementations of the main interfaces in the RMI protocol stack. An InvocationLayerFactory is responsible for creating a remote object’s proxy and the object’s invocation dispatcher when the object is exported.

BasicILFactory is an invocation layer factory implementation that installs the invocation layer objects for both the client and server sides. On the client side, BasicILFactory installs an instance of BasicInvocationHandler; on the server, it installs a BasicInvocationDispatcher. Using BasicILFactory, therefore, affords you the opportunity to intercept method invocations at either the client or the server sides (or both). This example focuses on the server side only.

To intercept method invocations on the server side, you must create a subclass of BasicInvocationDispatcher and install it through a subclass of BasicILFactory that overrides the factory’s createInvocationDispatcher() method to return the customized dispatcher.

BasicInvocationDispatcher is responsible for handling incoming method calls, and it presents several opportunities to intercept an incoming call before the call is finally dispatched to the appropriate object. One such point occurs while an incoming call’s arguments are unmarshalled from its stream:

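protected Object[] unmarshalArguments(Remote impl,
                                      Method method,
                                      ObjectInputStream in,
                                      Collection context)
    throws IOException, ClassNotFoundException
{
    // Decide whether the call should proceed before reading its arguments;
    // determineIfCallShouldProceed() is a server-specific check
    boolean shouldProceed = determineIfCallShouldProceed();

    if (shouldProceed) {
        // Let BasicInvocationDispatcher unmarshal the arguments as usual
        return super.unmarshalArguments(impl, method, in, context);
    } else {
        // Reject the call
        throw new SecurityException("Server can't execute request at this time.");
    }
}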

This method allows the arguments to be unmarshalled, and the call to proceed, only if the server determines it can execute that call. The method must be declared in a BasicInvocationDispatcher subclass, which the customized BasicILFactory then installs.
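The determineIfCallShouldProceed() helper is not defined above. One possible policy, sketched under the assumption that the server judges its load by the JVM-reported system load average from java.lang.management, divides that figure by the processor count and compares it with a threshold. LoadAdmissionPolicy and the 0.75 threshold are illustrative choices, not part of JERI.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.OperatingSystemMXBean;

/**
 * Hypothetical admission check for the compute server: accept a call only
 * while the system load average per processor is below a configured limit.
 */
public class LoadAdmissionPolicy {

    private final double maxLoadPerProcessor;
    private final OperatingSystemMXBean os =
            ManagementFactory.getOperatingSystemMXBean();

    public LoadAdmissionPolicy(double maxLoadPerProcessor) {
        this.maxLoadPerProcessor = maxLoadPerProcessor;
    }

    /** The decision itself, free of side effects so it is easy to test. */
    public boolean shouldProceed(double loadAverage, int processors) {
        if (loadAverage < 0) {
            // getSystemLoadAverage() returns a negative value on platforms
            // that cannot report it; this sketch then accepts the call.
            return true;
        }
        return loadAverage / processors < maxLoadPerProcessor;
    }

    /** Live check that determineIfCallShouldProceed() could delegate to. */
    public boolean determineIfCallShouldProceed() {
        return shouldProceed(os.getSystemLoadAverage(), os.getAvailableProcessors());
    }

    public static void main(String[] args) {
        LoadAdmissionPolicy policy = new LoadAdmissionPolicy(0.75);
        System.out.println("accept call: " + policy.determineIfCallShouldProceed());
    }
}
```

A customized dispatcher could hold one LoadAdmissionPolicy instance and delegate to it. Accepting calls when the platform cannot report a load average is a design choice of this sketch; a stricter server might reject them instead.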

Once you have a custom invocation layer factory, you can extend one of the above configuration files as follows:

import net.jini.jeri.*;
import net.jini.jeri.http.*;

worker.WorkerImpl {
    exporter = new BasicJeriExporter(HttpServerEndpoint.getInstance(9999),
                                     new CustomizedILFactory());
}

In this example, CustomizedILFactory refers to the BasicILFactory subclass that installs the customized BasicInvocationDispatcher.

Passing this last configuration file to the compute server results in the RMI invocation layer intercepting incoming method invocations. Altering the compute server’s behavior in this manner requires no code changes. While this example is very simple, it paves the way for more involved customizations. For instance, the compute server could require each client to authenticate itself before accepting that client’s request. Such a requirement could also be satisfied without changing the code of the compute server’s worker.

Flexible configuration

With its new configuration mechanism, JERI allows you to tailor RMI implementations to specific requirements. Because every layer of the RMI protocol stack can be customized and swapped at deployment time, JERI relieves developers of the burden of coding deployment-specific aspects into remote object implementations and their proxies. RMI protocol stack customization also allows fine-grained security, since security decisions can be made at every step of an RMI call.

While JERI is currently available only as part of the Jini 2.0 release, its flexibility may well win numerous fans outside the Jini community. Such adoption would encourage application server vendors to support the creation and management of JERI configurations with easy-to-use management tools.